How to Use Visual Intelligence on iPhone 16: Translate, Search, and Ask ChatGPT

Get information about objects and places on your iPhone instantly.

Apple introduced Visual Intelligence in iOS 18.2, where it was limited to what your iPhone’s camera could see. Starting with iOS 26, it can analyze both what your camera captures and anything displayed on your iPhone screen. That makes it an on-screen assistant that can recognize visuals, extract text, help you shop, and hand questions off to ChatGPT.

Here’s everything you need to know about using Visual Intelligence on your iPhone.

What is Visual Intelligence?

Visual Intelligence is an Apple Intelligence-powered feature introduced with the iPhone 16 series. It requires at least an A17 Pro chip, whose Neural Engine handles much of the visual processing on-device, which keeps things fast and private. Features that rely on ChatGPT or Google search send your request to those services.

Using Visual Intelligence, you can recognize and extract information about objects, places, and even content on your iPhone screen. Just point your camera, and your iPhone does the rest—whether it’s identifying a plant, translating foreign text, finding a product online, or even asking ChatGPT follow-up questions.

Which iPhones Support Visual Intelligence?

Visual Intelligence is part of the Apple Intelligence suite. It launched on the iPhone 16, 16 Plus, 16 Pro, and 16 Pro Max, where you invoke it with the Camera Control button. With iOS 18.4, it also became available on the iPhone 15 Pro, 15 Pro Max, and iPhone 16e, which lack the Camera Control and use the Action Button instead.

How to Use Visual Intelligence on iPhone

From getting detailed info about your surroundings to translating languages or querying ChatGPT, Visual Intelligence can be your daily assistant. Let’s explore its powerful capabilities.

1. Get Information About Places and Businesses

Visual Intelligence can fetch details such as business hours, menus, contact info, and more. Here’s how:

- Point your iPhone’s camera toward the business and long-press the Camera Control to invoke Visual Intelligence.

- Press the Camera Control again or tap the capture button on the display to analyze the business.

- Now, based on the business type, Visual Intelligence will suggest actions including but not limited to:

  - View Schedule: To reveal the operational hours

  - Order: For placing an order with the business

  - Menu: To view the business’s menu or services

Besides these actions, you’ll also find options to call the business, view its website, and more.

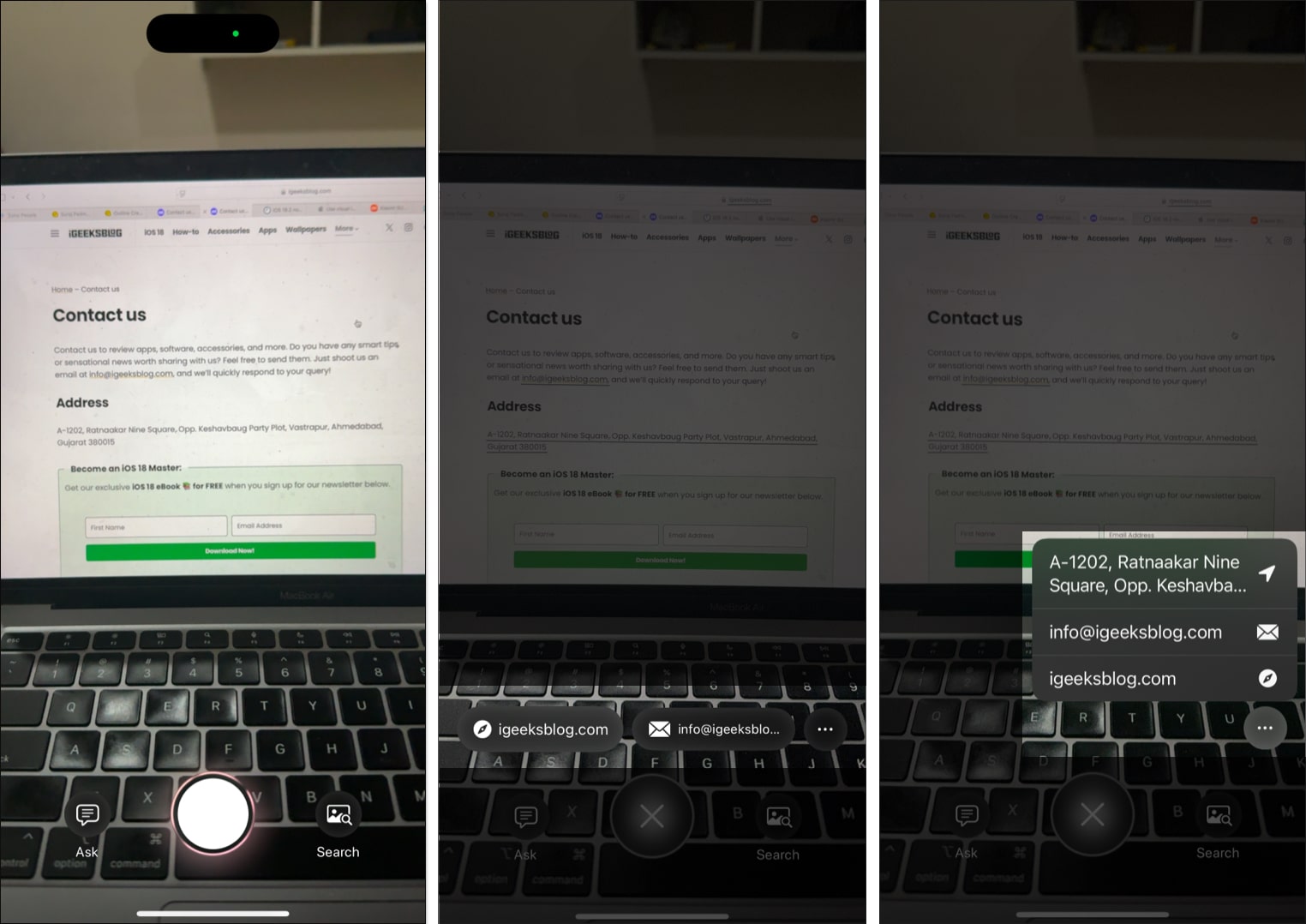

2. Interact with Text in Real Time

Visual Intelligence takes Live Text to the next level. To act on any text you see, whether on a sign, page, or screen, follow these steps:

- Point the camera toward the text and long-press the Camera Control to bring up Visual Intelligence.

- Press the Camera Control again or tap the capture button on the display to analyze the text.

- Depending on the text, Visual Intelligence will suggest actions such as summarizing, translating, reading aloud, visiting the website, calling the phone number, and more. Tap the one you prefer. For example, I used Visual Intelligence to summarize the text in the image below.

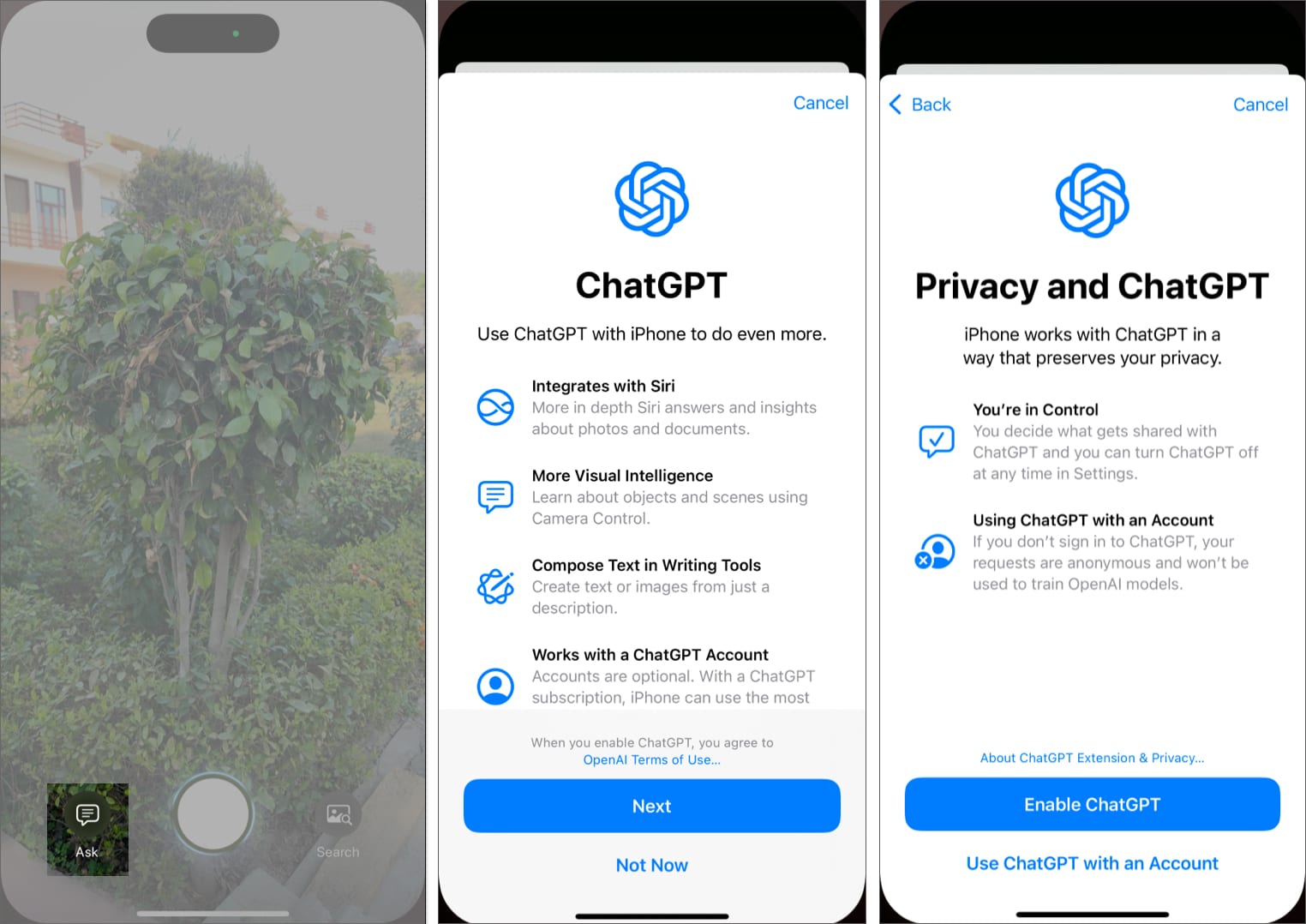

3. Ask ChatGPT for More Information

Thanks to the ChatGPT integration in iOS 18.2 and later, you can use Visual Intelligence to ask deeper questions about whatever your camera sees.

- Long-press the Camera Control to open Visual Intelligence, frame the subject, and tap the Ask button at the bottom.

- Grant ChatGPT the necessary permissions when prompted and tap Enable ChatGPT. You can choose to use ChatGPT with an account if you want. (You only need to do this the first time you use the ChatGPT integration with Visual Intelligence.)

- Give it a few seconds, and you’ll see the response in a ChatGPT card at the top of the screen. You can also type or dictate a follow-up question in the text field below if you need further information.

- Tap the X button in the bottom-right corner to exit Visual Intelligence.

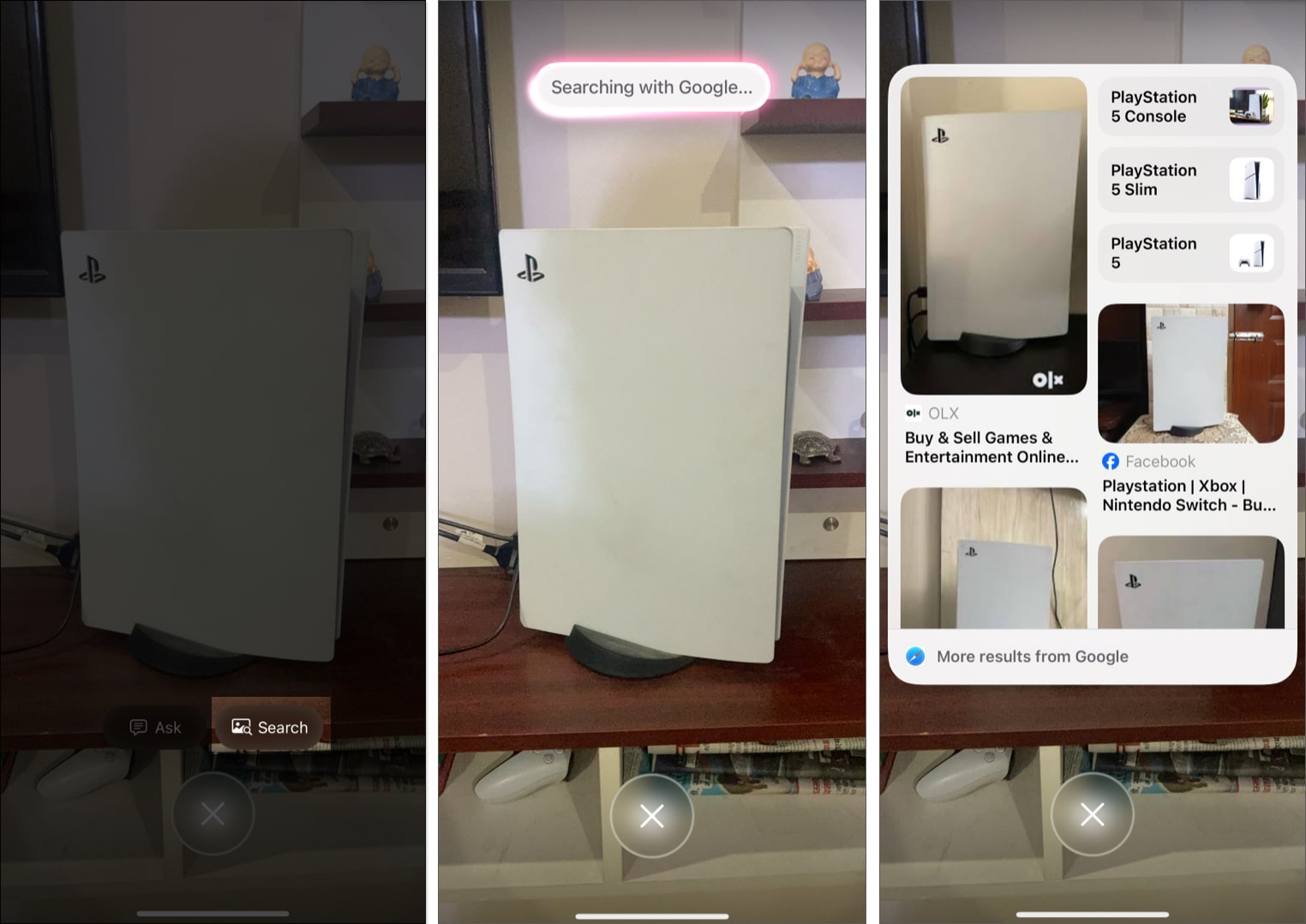

4. Search for Related Images on Google

Want to find visually similar items or products? You can search using Google directly from Visual Intelligence.

- Direct your iPhone’s camera toward the object and long-press the Camera Control to access Visual Intelligence.

- Tap the Search button in the bottom-right corner, and a Searching with Google dialog box will appear, followed by the search results.

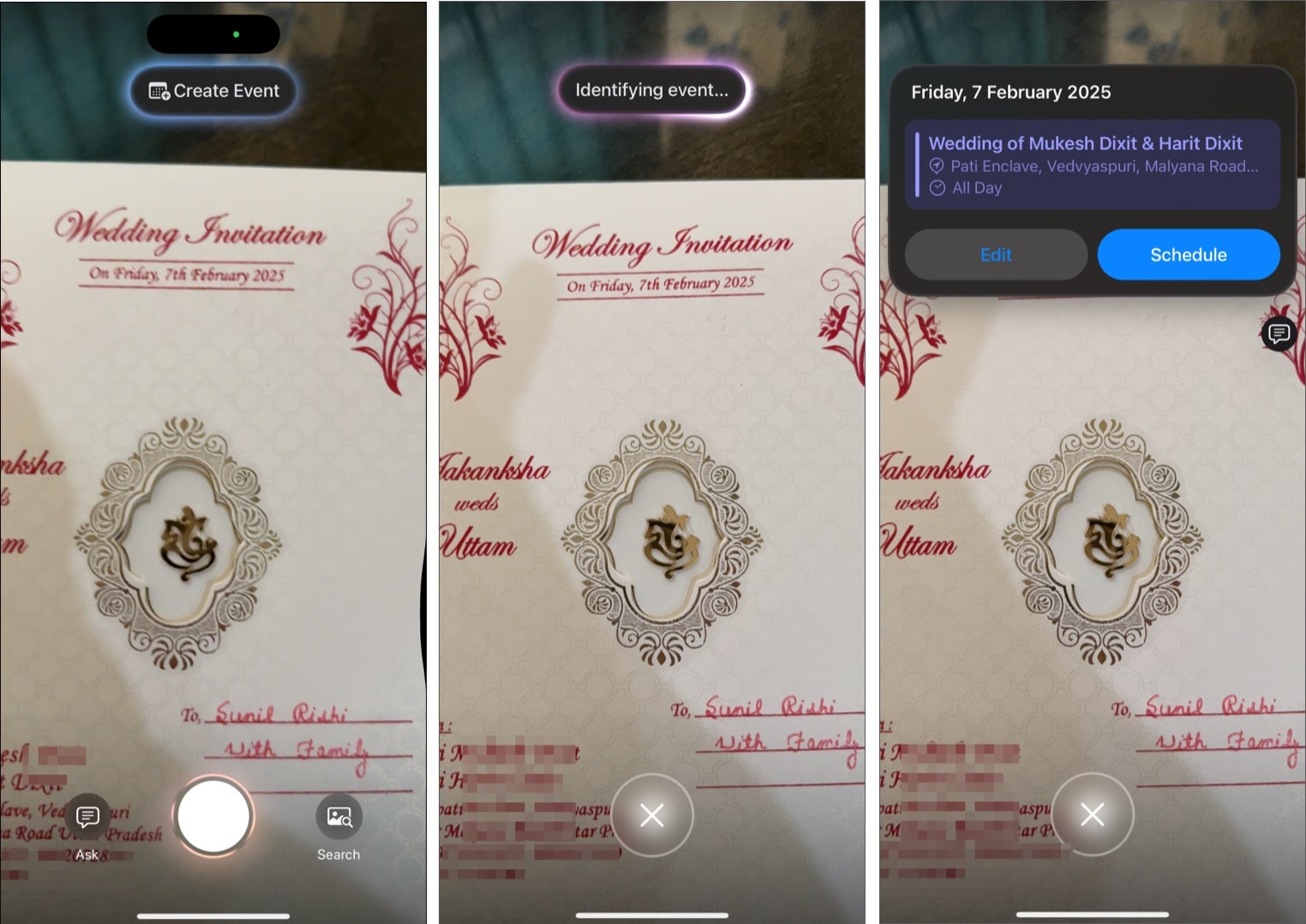

5. Add Events to Calendar from Posters or Flyers

Starting with iOS 18.3, you can use Visual Intelligence to create Calendar events simply by pointing your iPhone’s camera at a flyer or poster.

How to do it:

- Press and hold the Camera Control button to open Visual Intelligence.

- Point the iPhone’s camera at a flyer or poster with a date.

- A Create Event button will appear at the top center; tap it to let Visual Intelligence identify the event.

- Once identified, Visual Intelligence will prompt you to schedule the event; tap the Schedule button to complete calendar event creation.

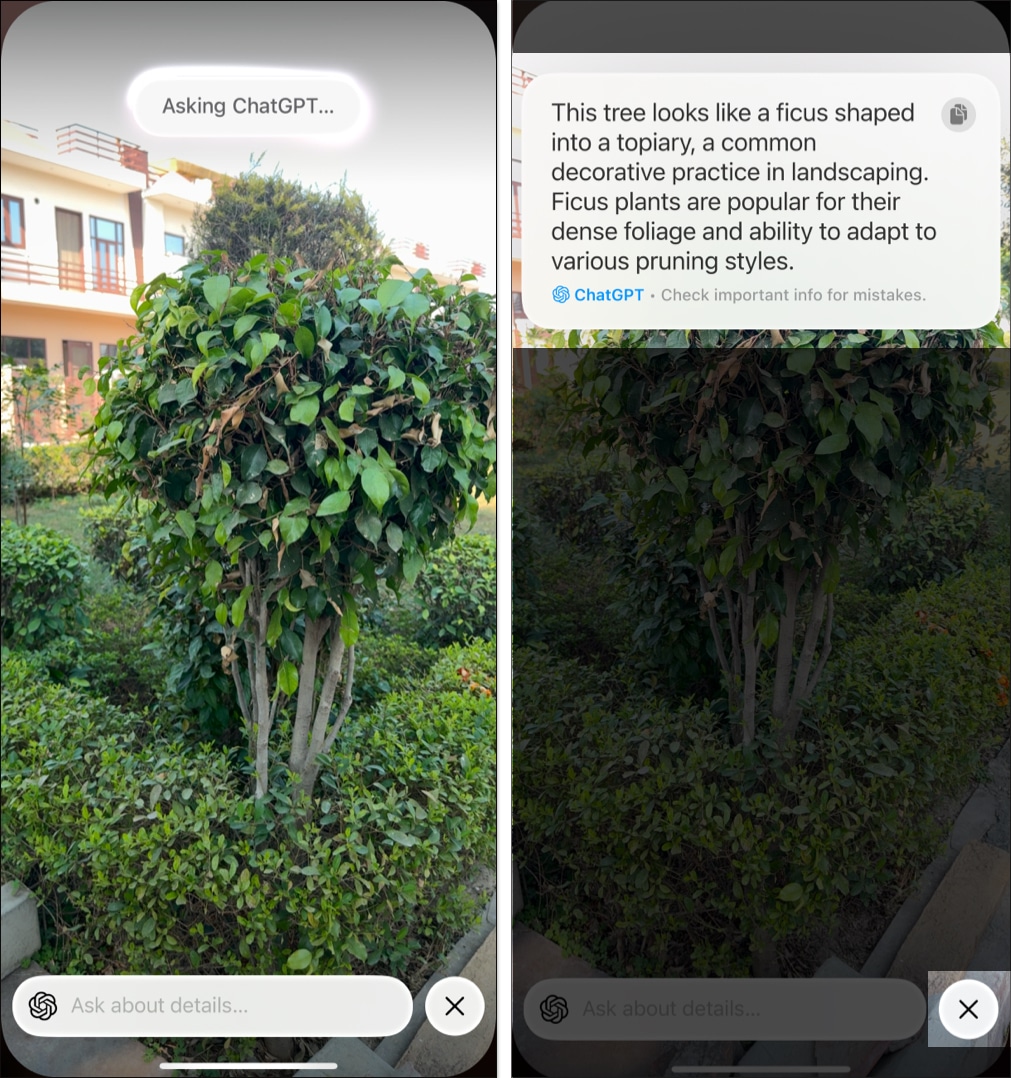

6. Instantly Identify a Plant or Animal

With iOS 18.3 or later, identifying flora and fauna is easier than ever.

- Open Visual Intelligence and point the iPhone’s camera at the subject (plant or animal) you want to identify.

- Visual Intelligence will automatically identify the subject and display its name at the top center.

- Tap on the subject’s name to get more information.

How to Use Visual Intelligence via the Action Button

Visual Intelligence was previously exclusive to the Camera Control button. With iOS 18.4, it is also accessible via the Action Button on iPhones that support Apple Intelligence but lack the Camera Control: the iPhone 15 Pro, 15 Pro Max, and iPhone 16e.

Customize Action Button for Visual Intelligence

- Open Settings and tap Action Button.

- Swipe left or right until Visual Intelligence appears.

Use Visual Intelligence via Action Button

Once configured, long-press the Action Button to invoke Visual Intelligence and start using it just like on the iPhone 16 series.

What’s New with Visual Intelligence in iOS 26

iOS 26 brings an innovative update: Visual Intelligence now works with screenshots. You can circle parts of a screenshot to query ChatGPT or search online instantly.

- Take a screenshot by pressing the Side button and the Volume Up button at the same time.

- Now, use your finger to circle the part of the image you’re interested in. A new Siri-style glow animation will appear around the circled part.

- Tap the Ask button and type a question, or tap the Image Search button (or swipe up) to run a reverse image search on the web.

Use this to compare product prices, learn more about images, or simply satisfy your curiosity.

Unlock the Full Potential of Visual Intelligence

Visual Intelligence transforms how you interact with your iPhone’s camera and screen. Whether you’re looking up a mysterious plant, translating signs, or using AI to dig deeper into your surroundings, it’s a powerful new way to connect the physical world to your digital one.

Are you using Visual Intelligence on your iPhone 16? What’s your favorite feature so far? Let us know in the comments!
